Imagine a world where humans are microchipped, their every move tracked and recorded. It may sound like something out of a science fiction novel, but microchipping is already part of everyday life. Chips help reunite lost pets with their owners and help farmers identify and monitor livestock. But when did this technology first make its way into the human body? In this article, we trace the history of human microchipping, from its origins to the ways it has evolved over time. So sit back, relax, and let's take a journey through the intriguing world of human microchipping.

The Concept of Microchipping

Microchipping means implanting a tiny electronic chip into a living organism, most often so that the animal or person can be identified later. The technology has already transformed animal welfare and is beginning to find uses in healthcare, with applications ranging from identifying lost pets to linking patients with their medical records. The sections below follow microchipping from the invention of the chip itself to its impact on society.

The Invention of Microchips

The story begins in the late 1950s, when engineers developed the integrated circuit, the foundation of modern computing. Jack Kilby of Texas Instruments is credited with building the first working integrated circuit, or microchip, in 1958. Decades of miniaturization followed, producing chips small enough to be sealed in a capsule the size of a grain of rice and setting the stage for implantable microchips.

Application of Microchips in Animals

One of the earliest and most widespread applications of implantable microchips is in animal welfare. In many countries it is now common practice to microchip pets: a small chip injected under the skin stores a unique identification number. If a lost animal is found, a shelter or veterinarian can scan the chip, look up the number in a registry, and contact the owner. This simple system has changed the way animal shelters, veterinarians, and pet owners work together to keep pets safe.

Exploration of Microchipping in Humans

As microchipping proved to be highly effective and beneficial in the animal world, researchers and innovators began exploring its potential applications in humans. Although the concept of human microchipping received mixed reactions initially, advancements in technology and the growing demand for improved healthcare and security systems paved the way for further exploration.

Pioneering Technologies in Microchipping

Early RFID Technology

The development of radio frequency identification (RFID) was a key milestone for microchipping. In the 1970s, researchers began using RFID tags to identify and track assets such as goods and vehicles. These early systems depended on an external reader placed near each tag to communicate with it, which limited them to short-range identification.

Radio Frequency Identification (RFID) Chips

As RFID chips matured, they became the basis of modern microchipping. An RFID chip pairs an integrated circuit with a small antenna. In the passive tags used for most identification, the reader's radio field powers the chip, which then sends back its stored data, so no battery is needed. The same principle underpins applications such as inventory management and contactless access control.

Implantable Microchips

Implantable microchips brought RFID inside the body. These tiny devices, often the size of a grain of rice, are sealed in a biocompatible glass capsule and injected under the skin to provide long-term identification. Because they are passive, they have no battery and cannot broadcast their location on their own. They still rely on an external reader, typically a handheld scanner held close to the skin, which powers the chip and reads its identification number. This makes them potentially useful in fields such as healthcare and security.

The Beginning of Human Microchipping

VeriChip Corporation and VeriChip Implants

The first commercial human implants arrived in the early 2000s, when the VeriChip Corporation, a subsidiary of Applied Digital Solutions, made headlines with its VeriChip implant. Designed for humans, the chip carried a unique identification number that could be used to retrieve a person's medical information. The VeriChip drew attention for its potential in emergencies, where quick access to medical records could save lives.

FDA Approval of VeriChip Implants

In 2004, the U.S. Food and Drug Administration (FDA) cleared the VeriChip for medical use, allowing the implant to be marketed in the United States and marking a significant milestone in the history of human microchipping. The decision also triggered ethical debates and concerns about privacy and personal autonomy.

First Documented Human Microchip Implant

Case of Kevin Warwick

Kevin Warwick, a British professor of cybernetics at the University of Reading, is widely reported to be the first person to have an RFID microchip implanted voluntarily. In 1998 he had a chip inserted into his arm to explore the possibilities of human-machine integration. The experiment sparked both curiosity and controversy, fueling debate about merging technology with the human body.

The First Known Human Cyborg

Warwick's experiments led to him being dubbed the world's first cyborg. The 1998 chip let computers in his department recognize him, so doors opened and lights switched on as he approached. In 2002 he went further, having an array of electrodes implanted into the nerves of his arm, which let him control a robotic arm using his own nerve signals. While these experiments raised significant ethical questions, they also opened up avenues for further research into human microchipping.

Ethical and Privacy Concerns

Debates Surrounding Human Microchipping

The emergence of human microchipping has sparked intense debates within society. Various ethical considerations, such as personal autonomy, informed consent, and potential abuse of technology, have been at the forefront of these discussions. Supporters argue that microchipping can offer benefits like improved medical care and enhanced security, while critics raise concerns about privacy, individual rights, and the potential for misuse.

Privacy Rights and Surveillance Concerns

Privacy is a crucial aspect when discussing human microchipping. Critics express concerns that widespread adoption of microchips could lead to increased surveillance and invasion of privacy. Questions regarding who has access to the information stored on microchips, how it is used, and the potential for hacking and data breaches raise important considerations regarding personal data security and individual freedom.

Current Applications of Human Microchipping

Medical and Healthcare Monitoring

Healthcare is one of the most significant current applications of human microchipping. An implanted chip can help emergency staff retrieve crucial medical information, such as allergies, blood type, and medical history. In systems like the VeriChip, the chip itself stores only an identification number that links to a record in a database, not the medical records themselves. Researchers are also developing implantable devices for remote monitoring of vital signs, tracking medication compliance, and even delivering drugs.

Secure Identification Systems

Microchips could also underpin secure identification systems, potentially supplementing or replacing traditional documents such as passports and ID cards. In principle, a chip that securely stores a unique identifier or biometric data could make identification quick and reliable and help combat identity theft and fraud. Everyday uses already exist on a small scale: in Sweden, for example, some people use implanted chips to open office doors and pay train fares.

Implantable Biometric Devices

Advances in microchip technology have also led to implantable biometric devices. Unlike passive identification chips, these devices can record and transmit physiological data such as heart rate, body temperature, and even brain activity. They hold considerable potential in healthcare, sports performance monitoring, and assistive technologies for people with disabilities.

Legal Regulations and Acceptance

Legislation on Human Microchipping

Human microchipping has prompted governments to consider its legal and regulatory implications, although rules vary widely and many jurisdictions have no law aimed specifically at human implants. Where laws do exist, they aim to balance technological progress against the protection of individual rights. Several U.S. states, including Wisconsin and California, have passed laws prohibiting anyone from being required to receive a microchip implant. Key areas of focus include informed consent, data protection, and the prevention of forced microchipping.

Public Perception and Acceptance

Public perception and acceptance of human microchipping remain diverse and influenced by individual beliefs and cultural contexts. While some individuals embrace the potential benefits of this technology, others harbor concerns over privacy and ethical implications. Public awareness campaigns and open dialogue are crucial in fostering understanding and enabling informed decisions regarding the use of microchips in humans.

Future Possibilities and Controversies

Advancements in Microchip Technology

The future of microchipping holds exciting possibilities as technology continues to advance. Smaller, more powerful microchips with enhanced capabilities are being developed, enabling sophisticated applications in various fields. Wireless connectivity, improved power efficiency, and integration with artificial intelligence are just some of the potential advancements on the horizon.

Potential Benefits and Risks

As human microchipping evolves, the potential benefits and risks need to be carefully evaluated. Benefits such as improved healthcare, enhanced security, and increased convenience must be balanced with the potential risks associated with privacy breaches, unauthorized access to personal data, and the long-term impact on individual autonomy. Ongoing research and ethical considerations will play a crucial role in navigating the future of human microchipping.

Implications for Society

Impact on Privacy and Individual Autonomy

The integration of microchips into human beings raises important questions about personal privacy and individual autonomy. Striking a balance between convenience, security, and personal rights is vital to ensure that microchipping technology aligns with societal values. Robust privacy laws, transparent data handling practices, and informed consent processes are essential to protect individual rights in an increasingly interconnected world.

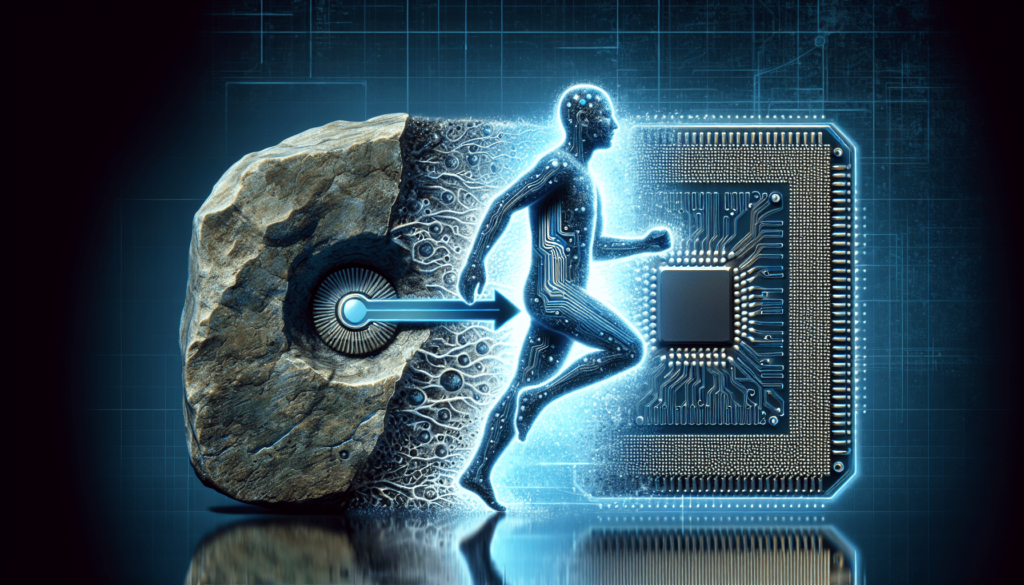

Integration of Humans and Technology

As human microchipping becomes more commonplace, it opens avenues for the integration of humans and technology on an unprecedented scale. This integration has the potential to enhance human capabilities, improve medical treatments, and revolutionize how individuals interact with machines and systems around them. Society must navigate this technological evolution while addressing concerns and ensuring responsible and ethical implementation.

Conclusion

Microchipping has come a long way since the first integrated circuits and the early use of chips to identify animals. From pioneering RFID technology to the first documented human microchip implant, the history of human microchipping has been both fascinating and controversial. As the technology continues to evolve, its ethical implications, privacy risks, and potential benefits need to be weighed carefully. With the right balance of regulation, public awareness, and responsible development, microchipping could bring significant advances in healthcare, security, and the overall well-being of individuals and society, blending humans and technology in ways previously unimaginable.